Before processing the details of a scene, humans perceive their global layout properties - also referred to as their “spatial envelopes.” This work demonstrates that the GIST features and a cluster-weighted regression model can be used to accurately predict human perception of the depth, openness, and perspective from single images of natural and urban scenes. This work is described in “Estimating perception of scene layout properties from global image features” by Michael G. Ross and Aude Oliva, published in the Journal of Vision.

| near | open | perpendicular |

|

|

|

| depth | openness | perspective |

|

|

|

| far | closed | parallel |

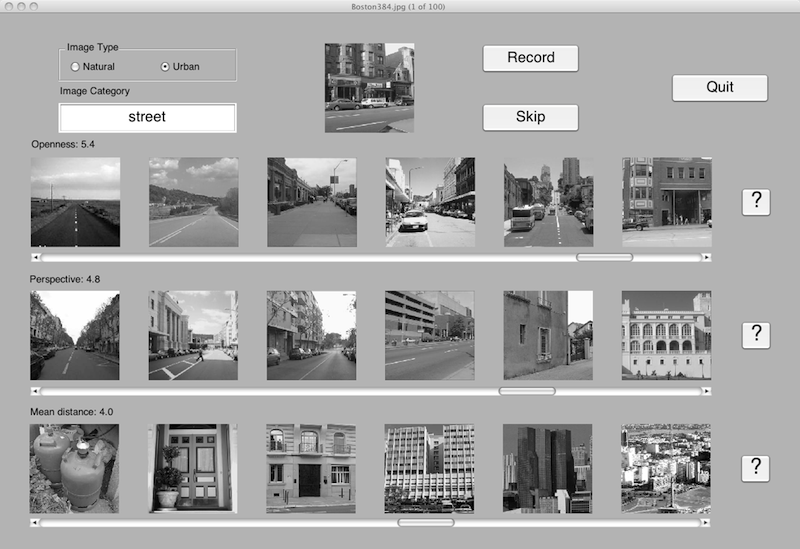

To understand and predict human perception of these properties, we conducted an experiment in which 14 human observers rated these properties on 7,138 images, using this interface.

The human ratings, the PCA GIST features for each image, and the PCA GIST basis vectors are available here. This much larger download contains color versions of all the images (though the experiments and results in the paper only used grayscale versions). Depth ratings range from near (1) to far (6), openness ratings range from open (1) to closed (6), and perspective ratings range from perpendicular (1) to parallel (6). Please see the paper for more details.

The method of predicting human ratings described in "Estimating perception of scene layout properties from global image features" by Michael G. Ross and Aude Oliva (Journal of Vision, 2010), is based on the GIST features developed by Oliva and Antonio Torralba. The regression functions are trained and computed using implementing cluster-weighted models, as described in "The Nature of Mathematical Modeling" by Neil Gershenfeld (1999). That code is available here. Regression used the first 24 principal components of the GIST features on a grayscale version of each image. The number of clusters was selected using cross-validation on the training sets, please see the paper for more detail.